Exposing minikube to external traffic using docker and nginx

Introduction

To follow the steps, you will need:

- A working vm instance whose ip address you know

- Working installation of Minikube

- Working installation of docker and some knowledge of how it works

Motivation

Kubernetes is a really amazing piece of software and as I was studying it, I didn’t want to keep relying on the cloud providers to do the testing.

The next option was to roll out my own cluster, but I also didn’t want to set up a fully operational one. A lightweight, single-node setup would do the job, and that’s when minikube got my attention.

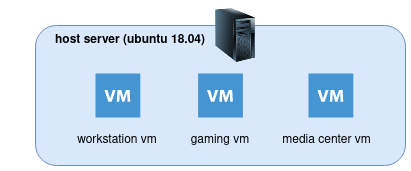

Right now, my infra is virtualized. The host machine runs Ubuntu 18.04, and each environment runs in its own vm using kvm/qemu with device passthrough, as follows:

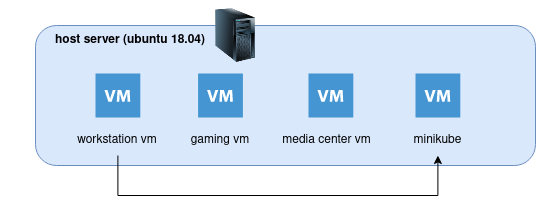

To make use of that structure, I would have to create a new vm and install minikube on it. I would also want to be able to access it externally using kubectl.

That need came with some caveats, which I describe now:

The minikube instance is not meant to be used in production:

minikube is local Kubernetes, focusing on making it easy to learn and develop for Kubernetes.

https://minikube.sigs.k8s.io/docs/start/

So it is meant to run a cluster in a secure environment, preferably as a local deployment. That’s not quite what I wanted: I wanted a cluster that I could access remotely, though still in a secure way.

minikube uses the concept of drivers, and I’ve considered some:

- none: runs on bare-metal

- docker: is the default driver

- ssh: will connect to the minikube cluster through ssh

As I am already running qemu/kvm on the host, I could have used the kvm2 driver. However, I didn’t want to install minikube on the host machine, and I wanted to keep the environments independent.

Weighing the pros and cons for my scenario, I opted to run it with the docker driver.

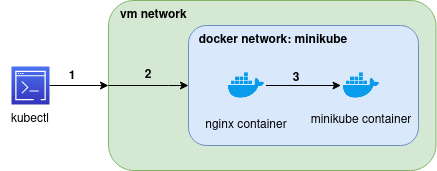

The default docker driver keeps the minikube instance inside the vm, and I wanted to access it with kubectl from outside the vm.

And that’s the story, let me describe the steps.

😀

Start minikube

Before starting minikube, we need one piece of information: the vm’s ip address. It is important because it is used both to set up minikube and to connect to it with kubectl: the API server certificate is signed for a fixed list of ip addresses, so the vm’s address has to be on that list.

The next step is to configure minikube to embed the certificates into the kubeconfig file:

$ minikube config set EmbedCerts true
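Embedding matters because we will later copy only the kubeconfig file to another machine, and a config that points to certificate files on the vm’s disk would not work there. Once the cluster is up, a small check like the one below can confirm it. This is only a sketch: the function name certs_embedded is made up for this example, and it assumes the standard kubeconfig field names (certificate-authority-data versus certificate-authority, and so on).

```shell
# Succeeds if the kubeconfig file "$1" carries its certificates inline
# (the *-data fields) instead of pointing at files on disk.
# certs_embedded is a hypothetical helper, not part of minikube or kubectl.
certs_embedded() {
  grep -q 'certificate-authority-data:' "$1" &&
    ! grep -Eq '^[[:space:]]*(certificate-authority|client-certificate|client-key):' "$1"
}

# Possible usage on the vm, after minikube has started:
#   certs_embedded ~/.kube/config && echo "certificates are embedded"
```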

Next, to start minikube so that we can use it externally, run the following command (don’t forget to replace the ip address with the one of your vm):

minikube start --cpus 3 --memory 3024 --apiserver-ips=192.168.88.174

- --apiserver-ips: adds the given ip address to the API server certificate, which allows remote connections using kubectl

If you don’t use the --apiserver-ips parameter, you will receive the following error:

Unable to connect to the server: x509: certificate is valid for 192.168.49.2, 10.96.0.1, 127.0.0.1, 10.0.0.1, not 192.168.88.174
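To see which addresses the API server certificate actually covers, you can inspect its Subject Alternative Names. Below is a minimal sketch, assuming openssl is installed on the machine you test from. san_has_ip is a hypothetical helper name, and it expects the text produced by openssl x509 -noout -text on stdin.

```shell
# Succeeds if the ip "$1" is listed as an "IP Address:" entry in the
# certificate text read from stdin (output of `openssl x509 -noout -text`).
# san_has_ip is a made-up helper name for this example.
san_has_ip() {
  grep -o 'IP Address:[0-9a-fA-F.:]*' | cut -d: -f2- | grep -qxF "$1"
}

# Possible usage from a machine that can reach the vm:
#   openssl s_client -connect 192.168.88.174:8443 </dev/null 2>/dev/null \
#     | openssl x509 -noout -text \
#     | san_has_ip 192.168.88.174 && echo "certificate covers the vm address"
```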

If everything turns out ok, you should see something like this:

$ minikube start --cpus 3 --memory 3024 --apiserver-ips=192.168.88.174

😄 minikube v1.23.2 on Ubuntu 18.04 (kvm/amd64)

✨ Automatically selected the docker driver

❗ Your cgroup does not allow setting memory.

▪ More information: https://docs.docker.com/engine/install/linux-postinstall/#your-kernel-does-not-support-cgroup-swap-limit-capabilities

🧯 The requested memory allocation of 3024MiB does not leave room for system overhead (total system memory: 3940MiB). You may face stability issues.

💡 Suggestion: Start minikube with less memory allocated: 'minikube start --memory=3024mb'

👍 Starting control plane node minikube in cluster minikube

🚜 Pulling base image ...

🔥 Creating docker container (CPUs=3, Memory=3024MB) ...

🐳 Preparing Kubernetes v1.22.2 on Docker 20.10.8 ...

▪ Generating certificates and keys ...

▪ Booting up control plane ...

▪ Configuring RBAC rules ...

🔎 Verifying Kubernetes components...

▪ Using image gcr.io/k8s-minikube/storage-provisioner:v5

🌟 Enabled addons: default-storageclass, storage-provisioner

💡 kubectl not found. If you need it, try: 'minikube kubectl -- get pods -A'

🏄 Done! kubectl is now configured to use "minikube" cluster and "default" namespace by default

Note that when you don’t provide the driver parameter, minikube selects a driver automatically, and in my case it picked the docker driver. If automatic driver selection fails, maybe your user doesn’t have access to docker.

Check the groups of your user, and fix them if needed:

$ groups

minikube adm cdrom sudo dip plugdev lxd docker

If you are running as a regular user, it should be in the docker group.
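The check can also be scripted. The sketch below assumes a typical Linux setup where docker access is granted through the docker group. has_group is a helper name invented for this example.

```shell
# Succeeds if group "$2" appears in the space-separated group list "$1".
# has_group is a hypothetical helper, written for this post only.
has_group() {
  case " $1 " in
    *" $2 "*) return 0 ;;
    *) return 1 ;;
  esac
}

# Possible usage (id -nG prints the group names of the current user):
#   has_group "$(id -nG)" docker || sudo usermod -aG docker "$USER"
# After adding the group, log out and back in so it takes effect.
```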

Exposing minikube using docker and nginx

For the next step, we will want to expose the minikube api server externally.

To do that, we will use nginx as a reverse proxy to tunnel the traffic to the instance inside docker.

Keep in mind that with this setup the cluster stays well contained: we won’t be exposing other parts of the cluster, and the kube-api is secured through TLS and signed certificates.

So, moving on: after starting minikube, you can check that it created a network on docker, and we will make use of that:

$ docker network list

NETWORK ID NAME DRIVER SCOPE

eae1bce0cdd2 bridge bridge local

23edcaedf34d host host local

bf8bf1be4a17 minikube bridge local

ded619531111 none null local

You can also check that the minikube is running:

$ docker ps -a

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

1dbcad6695b5 gcr.io/k8s-minikube/kicbase:v0.0.27 "/usr/local/bin/entr…" 2 hours ago Up 2 hours 127.0.0.1:49157->22/tcp, 127.0.0.1:49156->2376/tcp, 127.0.0.1:49155->5000/tcp, 127.0.0.1:49154->8443/tcp, 127.0.0.1:49153->32443/tcp minikube

From that you can also see that all the services are published only locally (bound to 127.0.0.1) and not externally.

We will work on that.
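If you prefer a quick check over reading the PORTS column, something like the sketch below classifies a single published address. docker port is a real docker subcommand, but binding_scope is just a helper name made up here, and it only looks at the address prefix.

```shell
# Prints "local-only" when the published address "$1" (for example
# 127.0.0.1:49154) is bound to loopback, and "external" otherwise.
# binding_scope is a hypothetical helper for this example.
binding_scope() {
  case "$1" in
    127.*|"[::1]:"*) echo "local-only" ;;
    *) echo "external" ;;
  esac
}

# Possible usage on the vm:
#   binding_scope "$(docker port minikube 8443 | head -n 1)"
```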

First, create the following nginx configuration file somewhere you can easily find it. I created a directory named nginx in my home directory, so in my case the full path is /home/minikube/nginx/nginx.conf:

user nginx;

worker_processes auto;

error_log /var/log/nginx/error.log notice;

pid /var/run/nginx.pid;

events {

worker_connections 1024;

}

http {

include /etc/nginx/mime.types;

default_type application/octet-stream;

log_format main '$remote_addr - $remote_user [$time_local] "$request" '

'$status $body_bytes_sent "$http_referer" '

'"$http_user_agent" "$http_x_forwarded_for"';

access_log /var/log/nginx/access.log main;

sendfile on;

#tcp_nopush on;

keepalive_timeout 65;

#gzip on;

include /etc/nginx/conf.d/*.conf;

}

stream {

server {

listen 8443;

#TCP traffic will be forwarded to the specified server

proxy_pass minikube:8443;

}

}

The important part of this file is the stream block, which forwards the raw TCP (TLS) traffic between clients outside the vm and the minikube container inside docker. Note that stream can’t be nested inside http. That’s why we put it in nginx.conf itself: the files in conf.d are included from within the http block, so a stream block placed there would not be valid.

The next step is to use docker and nginx to expose the kube api, and we can do that by:
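Since a misplaced stream block is an easy mistake to make, here is a rough pre-check you could run on the file before starting the container. It is only a heuristic sketch based on counting braces: stream_is_top_level is a made-up name, and it does not understand quoted strings. The authoritative check remains nginx’s own config test (nginx -t).

```shell
# Succeeds if the nginx config file "$1" has at least one "stream {" line
# and every such line sits at brace depth 0 (not inside http or any other block).
# Heuristic only: braces or "#" inside quoted strings are not handled.
stream_is_top_level() {
  awk '
    {
      line = $0
      sub(/[#].*/, "", line)                  # drop comments
      if (line ~ /^[ \t]*stream[ \t]*[{]/) {
        found = 1
        if (depth != 0) bad = 1               # stream opened inside another block
      }
      depth += gsub(/[{]/, "{", line)         # count opening braces on this line
      depth -= gsub(/[}]/, "}", line)         # count closing braces on this line
    }
    END { if (found && !bad) exit 0; exit 1 }
  ' "$1"
}

# Possible usage:
#   stream_is_top_level /home/minikube/nginx/nginx.conf && echo "stream block looks fine"
# Full validation with nginx itself (needs the minikube network so the
# upstream name resolves):
#   docker run --rm --network=minikube \
#     -v /home/minikube/nginx/nginx.conf:/etc/nginx/nginx.conf:ro \
#     nginx:stable nginx -t
```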

$ docker run --rm -it -d \

-v /home/minikube/nginx/nginx.conf:/etc/nginx/nginx.conf \

-p 8443:8443 \

--network=minikube \

nginx:stable

Check it runs:

$ docker ps -a

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

8eae9ba4f231 nginx:stable "/docker-entrypoint.…" 6 seconds ago Up 3 seconds 80/tcp, 0.0.0.0:8443->8443/tcp, :::8443->8443/tcp pensive_germain

1dbcad6695b5 gcr.io/k8s-minikube/kicbase:v0.0.27 "/usr/local/bin/entr…" 2 hours ago Up 2 hours 127.0.0.1:49157->22/tcp, 127.0.0.1:49156->2376/tcp, 127.0.0.1:49155->5000/tcp, 127.0.0.1:49154->8443/tcp, 127.0.0.1:49153->32443/tcp minikube

Right, at this point minikube is exposed outside the vm. Now we will get the kubeconfig file so we can use it with kubectl:

$ scp minikube@192.168.88.174:~/.kube/config ~/.kube/config

Pay attention to the ip address: replace it with the one of your vm.

One more step is to update the address of the kube-api in the config file. Set the value at the path .clusters[0].cluster.server to the address of the vm, which in my case is https://192.168.88.174:8443
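You can make that change by hand in an editor, or script it. The sketch below is one way to do it with sed, assuming the copied file only contains the minikube cluster (it rewrites every server: entry it finds). set_kube_server is a hypothetical helper name.

```shell
# Rewrites every "server:" entry in the kubeconfig file "$1" to the URL "$2".
# set_kube_server is a made-up helper; with several clusters in one file
# it would change all of them, so it only suits a single-cluster config.
set_kube_server() {
  tmp="$(mktemp)" || return 1
  sed -E "s#^([[:space:]]*server:[[:space:]]*).*#\1$2#" "$1" > "$tmp" && mv "$tmp" "$1"
}

# Possible usage on the machine where kubectl runs:
#   set_kube_server ~/.kube/config https://192.168.88.174:8443
# kubectl can do the same for a named cluster:
#   kubectl config set-cluster minikube --server=https://192.168.88.174:8443
```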

And that’s it.

How it works

- 1: kubectl runs outside the network and points to the docker service exposed on the vm at port 8443, from our previous docker command. Keep in mind that the port is bound to any address, so it also answers requests from outside. It is similar to a NodePort on kubernetes.

- 2: when the request arrives at the vm, it is directed to the container that published that port (8443), which is nginx.

- keep in mind that in this step the two containers are attached to the same docker network, so minikube can be reached directly: docker’s internal name resolution allows referencing the container by its name

- 3: in this step, nginx streams the request to minikube, exposing the 8443 port that was initially only accessible from inside the vm

TL;DR:

outside request => vm => directed to container nginx in docker network named minikube => redirect call to minikube container running in same network (docker network named minikube)

And that’s how we get to expose only the kube-api through a secure channel without exposing or breaking the sandbox.

Testing

Try the kubectl command:

$ kubectl get nodes

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

minikube Ready control-plane,master 117m v1.22.2 192.168.49.2 <none> Ubuntu 20.04.2 LTS 4.15.0-161-generic docker://20.10.8

And the cluster-info:

$ kubectl cluster-info

Kubernetes control plane is running at https://192.168.88.174:8443